How the Index encourages transparency

How does the Aid Transparency Index encourage transparency? The recently launched 2020 Aid Transparency Index illustrates the balance needed between competition and co-operation for driving transparency. As a tool the Index is quite simple: we encourage the world’s major aid organisations to be more transparent by scoring and ranking their published aid data. Since the first full Index in 2013, aid transparency has been increasing, and there is compelling evidence to suggest that the Index has played a part in this. But how does it work?

Why rank aid agencies?

Ranking indices have become an increasingly popular and effective tool for accountability and advocacy within governments and businesses. Examples include university league tables, the World Bank’s Doing Business ranking and global credit ratings. According to two Columbia University political scientists, Cooley & Snyder, rankings fulfil four main functions: judging quality, advocating for change, monitoring, and establishing norms and conventions. At Publish What You Fund we have found that our scoring process, as set out in our research methodology, allows us to standardise the assessment of aid transparency and so makes comparisons between organisations easier.

Methodology

The Aid Transparency Index uses 35 indicators grouped into five components – from financial data to performance outcomes and project attributes. We assess both the machine-readable International Aid Transparency Initiative (IATI) data and any other data published on an organisation’s website which meet our current tests. A key part of the Index is the active engagement we have with organisations through feedback, quality checks and the use of an independent reviewer during the scoring process.
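As a rough illustration of how indicator results could roll up into component scores and a total, here is a minimal sketch. It assumes each indicator carries a weight in points and a result between 0 and 1; the `Indicator` class, the indicator names, the component labels and the weights are all hypothetical and are not taken from the Index methodology.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Indicator:
    name: str
    component: str
    weight: float  # hypothetical number of points this indicator can contribute
    result: float  # share of the indicator's tests passed, from 0.0 to 1.0


def total_score(indicators: list[Indicator]) -> float:
    """Add up each indicator's weight scaled by its result."""
    return sum(i.weight * i.result for i in indicators)


def component_scores(indicators: list[Indicator]) -> dict[str, float]:
    """Group the weighted results by component."""
    scores: dict[str, float] = {}
    for i in indicators:
        scores[i.component] = scores.get(i.component, 0.0) + i.weight * i.result
    return scores


# Made-up example: two indicators in two illustrative components.
example = [
    Indicator("budget document", "finance", weight=10.0, result=0.5),
    Indicator("evaluations", "performance", weight=20.0, result=1.0),
]
print(total_score(example))
print(component_scores(example))
```

Giving one component more weight, as the Index does for performance data, would simply mean assigning larger `weight` values to that component's indicators.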

The data is assessed for quality and comprehensiveness in two stages. First, the IATI data is run through Publish What You Fund’s software, the Aid Transparency Tracker, to produce an initial score. For the 2020 Index we ran this assessment three times in total (in December 2019, February 2020 and March 2020), providing feedback each time so that organisations could improve their data before the final scores were calculated.

However, not all aid information is published as IATI data, so we also conduct a manual search and assessment, following a more detailed process described in our 2020 Technical Paper. This manual search is conducted twice, alongside an independent review by civil society organisations (CSOs), to ensure objectivity in the final results.

Second, Publish What You Fund staff conduct quality checks to confirm that the information published for the assessed indicators meets the expected standard. These checks are conducted twice: first as part of the initial assessment, and again at the end of data collection. As before, the results of the first round are shared with each organisation, giving them the chance to fix any issues.

The Aid Transparency Index Process

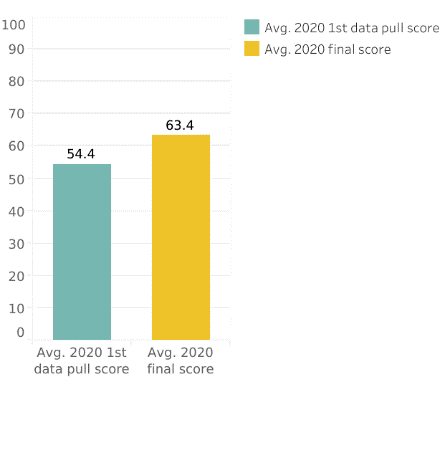

So how do we know our ranking is driving behaviour? As with any well-planned research project, we measure the transparency scores at the start and at the end of the process.

As the chart shows, overall the average transparency score increased by 9 points across the 47 organisations in the 2020 Index between the start and end of the scoring process. We also observed that those donors that engaged with our feedback were more likely to receive higher scores.
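The before-and-after comparison described here amounts to averaging each organisation's change in score. A minimal sketch, assuming scores are held as simple name-to-score mappings; the organisation names and numbers below are made up for illustration and are not Index data:

```python
from statistics import mean


def average_change(start: dict, end: dict) -> float:
    """Mean of (end score - start score) over organisations present in both rounds."""
    shared = start.keys() & end.keys()
    if not shared:
        raise ValueError("no organisations appear in both rounds")
    return mean(end[org] - start[org] for org in shared)


# Made-up scores for three hypothetical organisations.
start_scores = {"Org A": 50.0, "Org B": 70.0, "Org C": 30.0}
end_scores = {"Org A": 62.0, "Org B": 76.0, "Org C": 33.0}

print(average_change(start_scores, end_scores))  # 7.0
```

The same function could be applied separately to organisations that engaged with feedback and those that did not, to compare the two groups.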

This translates into better quality data being published online and to the IATI Registry.

Engagement

The whole process gives organisations multiple chances to respond to comments and update their data, to understand how they are being assessed, and to publish more, better quality data. The Index makes use of all the functions of rankings and indices outlined by Cooley & Snyder.

During the sampling we judge the data quality. For example, we found that the in-country locations of development projects were missing for several organisations, or that the geo-location given did not match the project description. In both cases we were able to provide feedback.
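A check for missing locations can be partly automated on IATI XML. The sketch below is an illustration only and is not the Aid Transparency Tracker's actual test: it assumes IATI 2.x activity files in which each `iati-activity` element carries an `iati-identifier` and, where a location is published, one or more `location` elements. The function name and the sample identifiers are hypothetical.

```python
import xml.etree.ElementTree as ET


def activities_missing_location(xml_text: str) -> list:
    """Return the identifiers of activities that have no <location> element."""
    root = ET.fromstring(xml_text)
    missing = []
    for activity in root.iter("iati-activity"):
        identifier = (activity.findtext("iati-identifier") or "").strip()
        if activity.find("location") is None:
            missing.append(identifier)
    return missing


# Made-up sample file with one located and one unlocated activity.
SAMPLE = """
<iati-activities version="2.03">
  <iati-activity>
    <iati-identifier>XX-EXAMPLE-1</iati-identifier>
    <location><point><pos>-1.29 36.82</pos></point></location>
  </iati-activity>
  <iati-activity>
    <iati-identifier>XX-EXAMPLE-2</iati-identifier>
  </iati-activity>
</iati-activities>
"""

print(activities_missing_location(SAMPLE))  # ['XX-EXAMPLE-2']
```

A mismatch between a geo-location and a project description is harder to detect automatically, which is one reason manual sampling remains part of the process.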

We also set norms and standards by providing guidance on good publication. For example, one donor organisation updated its Information Disclosure Policy in line with the assessment criteria used for the Index, in time for the final Index scoring.

The Index also advocates for better publication, particularly of performance data, by giving a greater weighting to this component. Since 2018 we have noticed a slight increase in the quality and quantity of performance data being published, such as reviews and evaluations. Finally, we monitor the regular publication of key documentation such as annual reports and audits. For example, we check the last publication dates and provide feedback if the most up-to-date versions are not found. Each of these functions (judging quality, setting norms and standards, advocating and monitoring) contributes to the average increase in scores shown.

Only after the feedback and engagement can a final transparency score be calculated for each organisation. Publish What You Fund then writes a report for each organisation included in the Index, providing them with an overview of how their score was calculated, along with commendations and recommendations for further actions to support their transparency journey. For the 47 organisations included in the 2020 Index these are available here.

Conclusion

In conclusion, we have been able to measure a positive change in scores during the Index process. Organisations often comment that the engagement and feedback we provide on their data is very useful, and we have observed that those organisations that engage more in the process reap greater rewards in their transparency scores. Ultimately, the cooperative process of technical engagement and communication during the Index data collection encourages greater transparency. This is in line with Honig and Weaver’s finding that aid agency elites drive transparency from within more than external factors do. The Index process is not always effective at driving improvement in transparency, as technical or policy limitations can mean that organisations are not able to respond to our feedback. But we know we have found a good formula to measure and monitor aid transparency and to drive progress forward through cooperative learning and communication.