Sampling for Sweetness – Part II

“Sampling is like a box of chocolates. You never know what you’re gonna get.”

This is how the team here at Publish What You Fund felt when the data collection period for our 2018 Aid Transparency Index ended on the 9th of March. It was once again time to put on our data user hats and conduct the second (and final) round of IATI data sampling and manual checks. Because sampling is such an essential part of our Index methodology, we shared our experiences of the first round in an earlier blog post.

What do we do when we sample?

Although much of our Index work is automated, 16 out of our 35 indicators require additional verification. As explained in our Technical paper, IATI data sampling and manual checks come after the Aid Transparency Tracker has automatically collected data made available by donors to IATI. We take samples to make sure that the information within an organisation’s IATI file is of consistently high quality and therefore useful. This means the information has to be current; it should meet the definition of the indicator and it should be published according to the IATI Standard. For example, our Aid Transparency Tracker checks if text is provided for project titles and descriptions. What it can’t do – and why human sampling is critical – is test if the text actually makes sense.
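To make the difference between automated and human checks concrete, here is a minimal Python sketch. It is not the Aid Transparency Tracker's actual code. It assumes an IATI 2.0x-style activity file, in which titles and descriptions carry their text in `narrative` child elements, and the sample data, function names (`has_text`, `automated_check`, `draw_sample`) and sample size are all hypothetical.

```python
import random
import xml.etree.ElementTree as ET

# Hypothetical IATI 2.0x-style activity file (illustrative data only).
SAMPLE_XML = """<iati-activities version="2.03">
  <iati-activity>
    <iati-identifier>XM-EXAMPLE-001</iati-identifier>
    <title><narrative>Rural water supply improvement</narrative></title>
    <description><narrative>Builds and maintains village boreholes.</narrative></description>
  </iati-activity>
  <iati-activity>
    <iati-identifier>XM-EXAMPLE-002</iati-identifier>
    <title><narrative>   </narrative></title>
    <description><narrative>Project 2</narrative></description>
  </iati-activity>
  <iati-activity>
    <iati-identifier>XM-EXAMPLE-003</iati-identifier>
    <description><narrative>asdf</narrative></description>
  </iati-activity>
</iati-activities>"""


def has_text(activity, element):
    """Presence-only check: does the element hold at least one non-blank narrative?"""
    for narrative in activity.findall(f"{element}/narrative"):
        if narrative.text and narrative.text.strip():
            return True
    return False


def automated_check(activity):
    """Run the presence test on the basic text fields of one activity."""
    return {
        "title": has_text(activity, "title"),
        "description": has_text(activity, "description"),
    }


def draw_sample(activities, sample_size, seed=None):
    """Draw a random sample of activities to pass on for manual (human) review."""
    rng = random.Random(seed)
    return rng.sample(activities, min(sample_size, len(activities)))


root = ET.fromstring(SAMPLE_XML)
activities = root.findall("iati-activity")

# Automated step: every activity gets the presence check.
results = {a.findtext("iati-identifier"): automated_check(a) for a in activities}

# Human step: a random subset is read by a person to judge whether the text makes sense.
for_review = draw_sample(activities, sample_size=2, seed=1)

for identifier, checks in results.items():
    print(identifier, checks)
```

Note that "Project 2" and "asdf" both pass the presence check even though neither is a meaningful description. Catching that kind of problem is exactly the job left to human reviewers.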

This year we increased the number of samples per indicator. With more data published to the IATI Registry, we wanted to make the Index sampling and checks more rigorous.

We estimated that over the course of a month, across the first and second rounds of sampling, the team (seven sets of eyes working at full capacity) checked around 18,900 individual pieces of information to validate their quality. I’m sure you would agree this is no small feat for a relatively small NGO like us!

What happens between rounds 1 and 2?

After the first round of sampling, we shared the outcomes with donors and were pleased to see many of them get in touch with us about their performance and ask for recommendations on how to do better.

Now it was up to them to improve their data before the end of data collection. We were very curious about the second round of sampling. Would we see the expected and hoped-for improvements?

And as it turned out, that second box of sampling contained a lot of sweet and delicious chocolates! Two very positive developments had taken place:

- Donors improved the comprehensiveness of their publication, publishing comparable data on more indicators between the first and second round.

Between January and March, donors provided more information in the IATI format for 14 of the 16 sampled indicators. For example, 12 more donors published an organisation strategy and six of them added a procurement policy to their IATI publication. An extra 9% of donors published tenders as well as impact appraisals, and 11% more donors made project budget documents available in the IATI Standard. We were happy to see this improvement among the least published indicators overall.

- Donors improved the quality of the information published in response to round one, as more indicators passed in the second round of sampling.

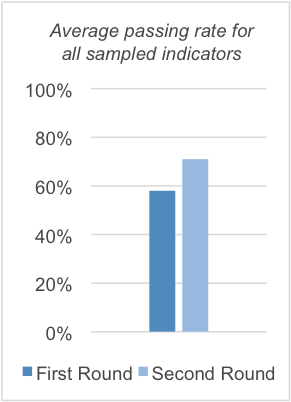

We were particularly pleased to see an improvement in the quality of data published to the IATI Registry, directly in response to the feedback we shared with donors in January. On average, the share of sampled indicators that passed rose from 58% to 71% between the first and second rounds. Significant progress was made with regard to the allocation policy and organisation strategy indicators, for which 45% and 57% more donors respectively provided information that met our criteria. The basics also improved: 17% more donors passed descriptions and 14% more donors passed titles in the second round.

All of this is excellent news, as we know that the more comprehensive and the higher quality the data provided by donors, the more useful it is for reuse, both internally by other departments and country offices and, critically, externally by partner governments and civil society organisations.

However, problems persist. Some donors repeated the data mistakes we discovered during the first sampling round: not getting the basics right, for example not entering understandable titles and descriptions; publishing “noisy” data due to unclear and inconsistent documentation; failing to provide current information. These problems are not limited to the donors ranked ‘very poor’ and ‘poor’ but also apply to some of the top performers.

What can we take away from this?

The Aid Transparency Index process works. Significant progress was made between the first sampling round back in January and the end of data collection at the beginning of March. The looming end of data collection spurred donors to work ambitiously on both the quantity and the quality of their data.

It is not just the Index as a tool in itself but also the interactive data collection process that helps create these positive changes. Engaging with donors on a regular basis, keeping them updated on their assessment and answering their questions creates a positive feedback loop from which everyone benefits: donors improve their transparency levels, Publish What You Fund are happy about the progress and, most of all, data users are able to take advantage of more and higher quality data.

However, as the 2018 Index results indicate, the job is not done. To advocate for better data quality beyond the Index process, we have informed donors about their performance and about any data issues identified in the second sampling round.

We encourage donors to keep up the momentum regardless of Index cycles and to keep on improving and regularly updating their data. Index and non-Index donors can assess the quality of their IATI data at any point using our free, open source Data Quality Tester.

At Publish What You Fund, we believe donors should take responsibility for the data they publish and actively promote its use, internally and externally. We have started pushing the agenda in this direction and will continue to do so, learning from our work to date with donors, partner country governments and CSOs. Only then will the full potential of the aid transparency movement be realised.